Dev Blog - Gainspace

Research

We started this project with some user research: interviews with professors Clay Shirky and Dan Shiffman. The big takeaways from both interviews were: 1) education needs to be in service of community, 2) effective learning environments depend on learners’ comfort, persistence, and comprehension, and 3) educators don’t want a tool that will make them obsolete, but they’re all very excited by the prospect of a “tireless tutor” that can give immediate feedback on a concept. (Link to meeting notes.)

Coming away from these interviews, we were interested in developing some sort of writing feedback tool, but weren’t sure how to start. That changed about two weeks later with the launch of Tres’ friend’s company, ES.AI. This project, which has already been picked up by a few incubators, uses a GPT-3 model fine-tuned on high-quality proprietary data to give feedback on user essays in a stylized, highly controlled environment. We loved the idea and decided to “borrow” it by applying our own custom datasets to a chat app in the same way. Because we had already spoken with Dan Shiffman, creator of the popular YouTube channel The Coding Train, we got permission to use his book The Nature of Code, as well as transcripts of his many videos, as GPT-3 training data. This process is still ongoing.

In addition to these interviews, we also interviewed 15 students to identify common pain points and high points in online learning. (A more fun blog post about that is coming soon, I promise.)

Logic

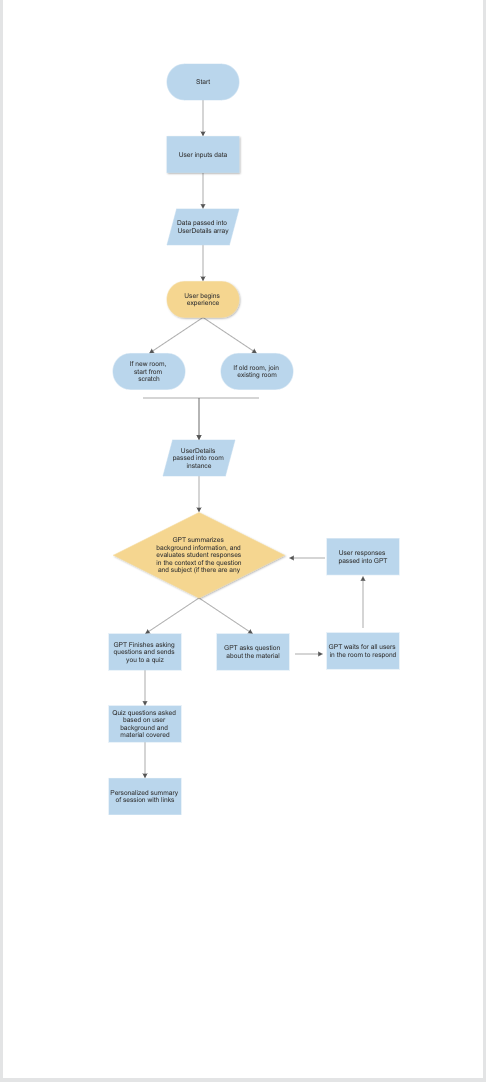

The program runs on an experimental multi-depth ChatGPT logic system that allows for both group GPT calls and individual GPT calls. The diagram above shows how that logic works.

(The diagram is grainy because I don’t want to pay for SmartDraw; I’ll remake it soon.) Essentially, each user’s details are passed into a server-side array that keeps track of their background and highest level of education. The questions and answers generated for that user are then scaled in difficulty to their education level and background.
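Here’s a minimal TypeScript sketch of what that server-side user array could look like. The education levels, the 1-4 difficulty scale, and the helper names (registerUser, difficultyFor, systemPromptFor) are all hypothetical; to keep it simple, this version derives the difficulty number from education alone and passes the background into the prompt as free text rather than scoring it.

```typescript
// Hypothetical education levels, ordered from least to most advanced.
type EducationLevel = "high_school" | "bachelors" | "masters" | "doctorate";

interface UserProfile {
  id: string;
  background: string; // free-text personal + professional background
  education: EducationLevel; // highest level achieved
}

// Hypothetical 1-4 difficulty scale keyed by education level.
const EDUCATION_RANK: Record<EducationLevel, number> = {
  high_school: 1,
  bachelors: 2,
  masters: 3,
  doctorate: 4,
};

// Server-side array of user details.
const users: UserProfile[] = [];

function registerUser(profile: UserProfile): void {
  const i = users.findIndex((u) => u.id === profile.id);
  if (i >= 0) users[i] = profile; // re-registering replaces the old details
  else users.push(profile);
}

function getUser(id: string): UserProfile {
  const user = users.find((u) => u.id === id);
  if (!user) throw new Error(`Unknown user: ${id}`);
  return user;
}

// Difficulty comes from education only in this sketch.
function difficultyFor(id: string): number {
  return EDUCATION_RANK[getUser(id).education];
}

// System prompt asking the model to pitch its questions and answers at that level.
function systemPromptFor(id: string): string {
  const user = getUser(id);
  return (
    `You are a patient tutor. Learner background: ${user.background}. ` +
    `Pitch questions and answers at difficulty ${difficultyFor(id)} on a 1-4 scale.`
  );
}
```

A real version would probably weigh the background too (e.g. a designer asking about vectors vs. a physics grad), but that scoring is left open here.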

Code

The first thing we had to do to get this working was create a version of the OpenAI API call that works on a multi-user web server. To do this, we modified the data structure the API expects on the server side, so that we can keep track of which user on the server is asking GPT a question. With this approach (which also works for a single user), we pass in an array of all previous messages, along with the context of who sent each one. We update that array in an updateChat function.
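Here’s roughly how a multi-user message array could be turned into a request body for OpenAI’s Chat Completions endpoint. This is a sketch under our own assumptions: the ChatEntry shape, the sender field, the group/individual switch (mirroring the group and individual GPT calls described in the Logic section), and the gpt-3.5-turbo default are illustrative. The one API detail it leans on is that chat messages accept an optional name field for telling participants apart; to stay within the API’s allowed characters, the sketch conservatively reduces sender ids to letters, digits, and underscores.

```typescript
type Role = "system" | "user" | "assistant";

// One entry in the server-side history. `sender` is the user the turn belongs to:
// the asker for "user" entries, and the user being answered for "assistant" entries.
interface ChatEntry {
  role: Role;
  content: string;
  sender?: string;
}

// Message shape accepted by the Chat Completions API (`name` is optional).
interface ApiMessage {
  role: Role;
  content: string;
  name?: string;
}

interface ChatRequestBody {
  model: string;
  messages: ApiMessage[];
}

// Reduce a user id to letters, digits, and underscores (max 64 characters).
function toApiName(sender: string): string {
  return sender.replace(/[^a-zA-Z0-9_]/g, "_").slice(0, 64);
}

// "group": the whole room history goes to the model.
// "individual": only system prompts plus the asker's own exchanges.
function buildRequestBody(
  history: ChatEntry[],
  asker: string,
  mode: "group" | "individual",
  model: string = "gpt-3.5-turbo",
): ChatRequestBody {
  const relevant =
    mode === "group"
      ? history
      : history.filter((e) => e.role === "system" || e.sender === asker);
  const messages = relevant.map((e): ApiMessage => {
    const msg: ApiMessage = { role: e.role, content: e.content };
    // Tag user turns with who asked, so the model can tell participants apart.
    if (e.role === "user" && e.sender) msg.name = toApiName(e.sender);
    return msg;
  });
  return { model, messages };
}
```

Sending it is then a JSON POST of that body to the /v1/chat/completions endpoint with an `Authorization: Bearer <key>` header. Because the API itself keeps no conversation state, the server-side history is what lets one deployment serve many users.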

The updateChat code is pretty straightforward: it simply appends each incoming message onto a running list of messages. This way, we know locally who’s sending what, and a single GPT instance can serve any number of users, because each request hands it a bespoke array of messages based on who’s asking. Very cool!
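A minimal sketch of updateChat. The function name comes from the post; its signature, the RoomMessage shape, and the Map keyed by room id (or by user id, for private chats) are our assumptions.

```typescript
interface RoomMessage {
  role: "user" | "assistant";
  content: string;
  sender: string; // user id of whoever the turn belongs to
}

// One message array per key: a room id for group chat, or a user id for private chat.
const chats = new Map<string, RoomMessage[]>();

// Append a message to the history for `key`, creating the array on first use,
// and return the updated history so it can be handed straight to the API call.
function updateChat(key: string, message: RoomMessage): RoomMessage[] {
  let history = chats.get(key);
  if (history === undefined) {
    history = [];
    chats.set(key, history);
  }
  history.push(message);
  return history;
}
```

Keying by room gives the shared “group” context; keying by user gives each person a private thread, and the same function handles both.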

Quiz Code

After the session wraps, the user is sent to a personalized quiz. That code looks like this:

This code iterates through multiple code
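A hypothetical sketch of one way a personalized quiz could be graded: iterate through the questions, count correct answers, and collect the topics the user missed, which could then feed the plan for next steps. The QuizQuestion shape, multiple-choice format, and gradeQuiz name are made up for illustration.

```typescript
interface QuizQuestion {
  prompt: string;
  choices: string[];
  answerIndex: number; // index into `choices` of the correct answer
  topic: string; // concept the question checks
}

interface QuizResult {
  score: number;
  total: number;
  missedTopics: string[]; // unique topics answered incorrectly, in question order
}

// Grade multiple-choice responses; responses[i] is the chosen index for questions[i].
function gradeQuiz(questions: QuizQuestion[], responses: number[]): QuizResult {
  let score = 0;
  const missed = new Set<string>();
  questions.forEach((q, i) => {
    if (responses[i] === q.answerIndex) {
      score += 1;
    } else {
      missed.add(q.topic); // unanswered questions (undefined) also count as missed
    }
  });
  return { score, total: questions.length, missedTopics: Array.from(missed) };
}
```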

Interface - progress 04/11

We kept our interface relatively straightforward, basing it on the UI of social and work video apps like Wonder.me and Zoom. When users join a server, they’re invited to join a room. Once inside, they’re connected with the other users in that room, can communicate via audio/video, and can move their video around with the mouse. The border of each video is also audio-reactive, much like in Zoom or Wonder.me.
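The audio-reactive border can be approximated by measuring loudness with the Web Audio API. This is a sketch, assuming each participant’s stream is routed through an AnalyserNode whose getByteTimeDomainData method fills a Uint8Array with 8-bit samples centered on 128; rmsLevel and borderWidthFor are hypothetical helpers, and the pixel range and gain are arbitrary.

```typescript
// Convert one frame of 8-bit time-domain samples (silence = 128) into an
// RMS loudness between 0 (silent) and 1 (full scale).
function rmsLevel(samples: Uint8Array): number {
  if (samples.length === 0) return 0;
  let sumSquares = 0;
  for (let i = 0; i < samples.length; i++) {
    const centered = (samples[i] - 128) / 128; // roughly -1..1
    sumSquares += centered * centered;
  }
  return Math.sqrt(sumSquares / samples.length);
}

// Map loudness to a border width in pixels. `gain` boosts quiet speech, since
// typical voice RMS sits well below 1; the result is clamped to [minPx, maxPx].
function borderWidthFor(level: number, minPx = 2, maxPx = 12, gain = 4): number {
  const width = minPx + level * gain * (maxPx - minPx);
  return Math.min(maxPx, Math.max(minPx, width));
}
```

In the browser, you’d connect `audioContext.createMediaStreamSource(stream)` to an analyser from `audioContext.createAnalyser()`, then on each requestAnimationFrame fill a `Uint8Array(analyser.fftSize)` via `analyser.getByteTimeDomainData(...)` and set the video element’s `style.borderWidth` from `borderWidthFor(rmsLevel(buffer))`.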

Chat logs can be organized by user and by individual room, and then referenced later.
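A sketch of how stored chats could be looked up by room, by user, or both, assuming each logged message records its room, user, and a timestamp. The LoggedMessage shape and queryChats name are hypothetical.

```typescript
interface LoggedMessage {
  room: string;
  user: string;
  text: string;
  timestamp: number; // e.g. milliseconds since epoch
}

// Any field left undefined matches everything.
interface ChatQuery {
  room?: string;
  user?: string;
}

// Return the messages matching every field set in `query`, oldest first.
// filter() builds a new array, so sorting never reorders the original log.
function queryChats(log: LoggedMessage[], query: ChatQuery): LoggedMessage[] {
  return log
    .filter(
      (m) =>
        (query.room === undefined || m.room === query.room) &&
        (query.user === undefined || m.user === query.user),
    )
    .sort((a, b) => a.timestamp - b.timestamp);
}
```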

Interface - progress 04/18

We’ve added a registration page to get an idea of who’s joining the class and what their interests are:

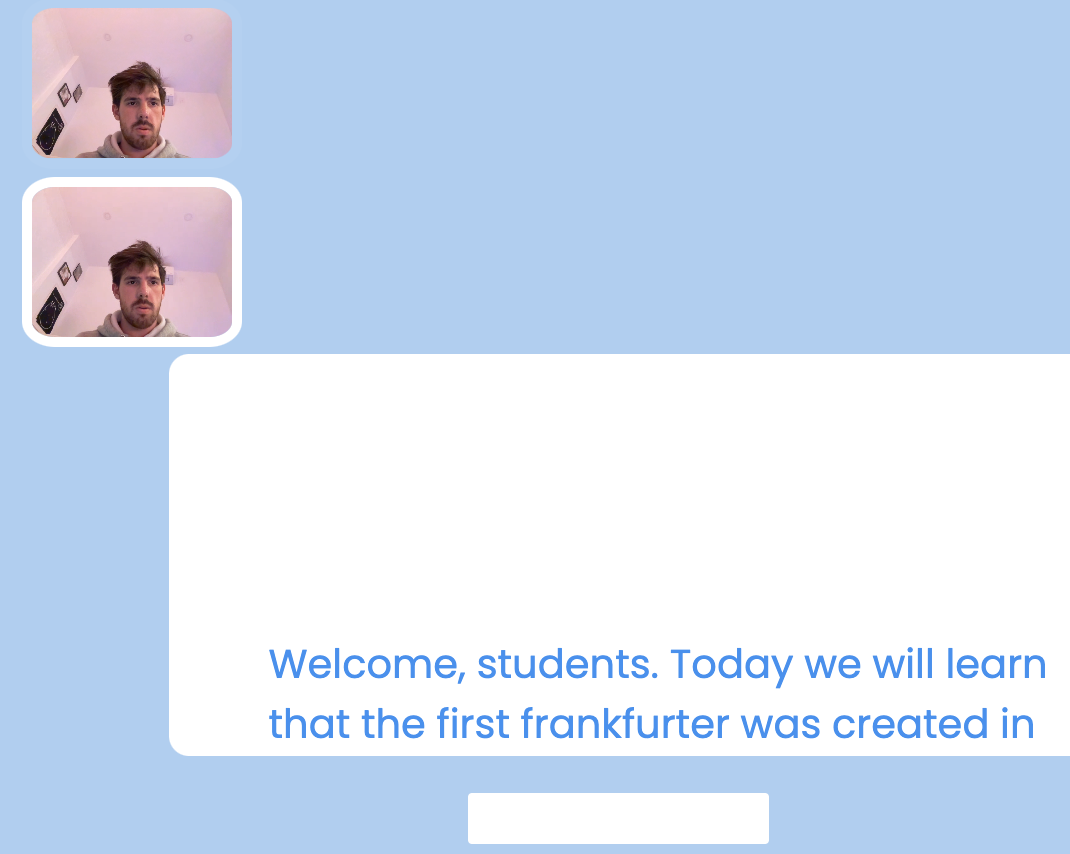

This page includes fields for name, personal and professional background, and subject of interest. The subject of interest is passed to a new HTML page, where it becomes the room. From there, the user can start interacting with the LLM.
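One way to pass the subject along is as a query parameter on the room page’s URL. This is a sketch; the room.html filename, the room parameter name, and the slug rules are assumptions, not necessarily what the app does.

```typescript
// Turn a free-text subject like "Creative Coding & p5.js" into a URL-safe slug.
function roomSlug(subject: string): string {
  return subject
    .trim()
    .toLowerCase()
    .replace(/[^a-z0-9]+/g, "-") // collapse runs of other characters into one hyphen
    .replace(/^-+|-+$/g, ""); // drop leading/trailing hyphens
}

// Build the room page address, e.g. <base>/room.html?room=creative-coding-p5-js.
function roomUrl(baseUrl: string, subject: string): string {
  const slug = roomSlug(subject);
  if (slug === "") throw new Error(`Subject has no usable characters: "${subject}"`);
  const prefix = baseUrl.endsWith("/") ? baseUrl : `${baseUrl}/`;
  return `${prefix}room.html?room=${encodeURIComponent(slug)}`;
}
```

On the room page, `new URLSearchParams(window.location.search).get("room")` would read the slug back. Slugging means “Creative Coding” and “creative coding” land in the same room.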

It’s worth mentioning that this room is WebRTC-enabled, so users can both see and speak to each other. They can query ChatGPT collectively until a set number of questions has been asked, at which point each user is directed to a personalized quiz. After the quiz, they’re given a plan for next steps; for scoping reasons, that’s where the most basic implementation of this educational tool ends.
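The question limit can be modeled as a small per-room counter that flips the room into quiz mode. A sketch, assuming the server counts questions per room; QUESTION_LIMIT = 5 is a placeholder, since the actual number isn’t stated, and recordQuestion is a hypothetical name.

```typescript
type RoomPhase = "chat" | "quiz";

interface RoomState {
  questionsAsked: number;
  phase: RoomPhase;
}

// Placeholder limit; the real value isn't specified.
const QUESTION_LIMIT = 5;

const rooms = new Map<string, RoomState>();

// Count one question in `roomId` and return the room's phase afterwards.
// Once a room reaches the limit it stays in "quiz" and stops counting.
function recordQuestion(roomId: string, limit: number = QUESTION_LIMIT): RoomPhase {
  const state: RoomState = rooms.get(roomId) ?? { questionsAsked: 0, phase: "chat" };
  if (state.phase === "quiz") return state.phase;
  state.questionsAsked += 1;
  if (state.questionsAsked >= limit) state.phase = "quiz";
  rooms.set(roomId, state);
  return state.phase;
}
```

When recordQuestion returns "quiz", the server could tell every client in that room to navigate to its personalized quiz page.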

Late April

Fresher styling. Video summary works. Oh yeah.